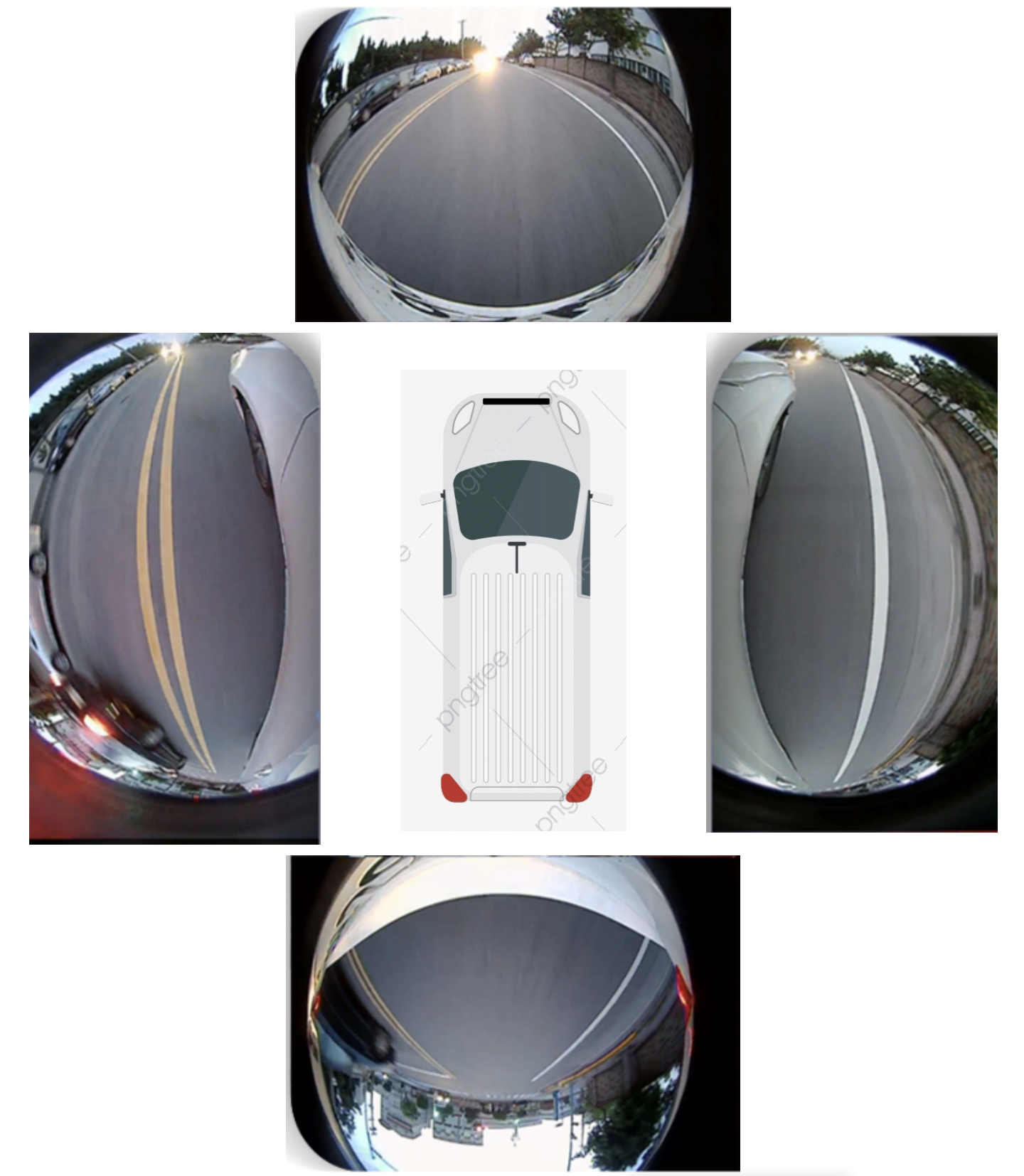

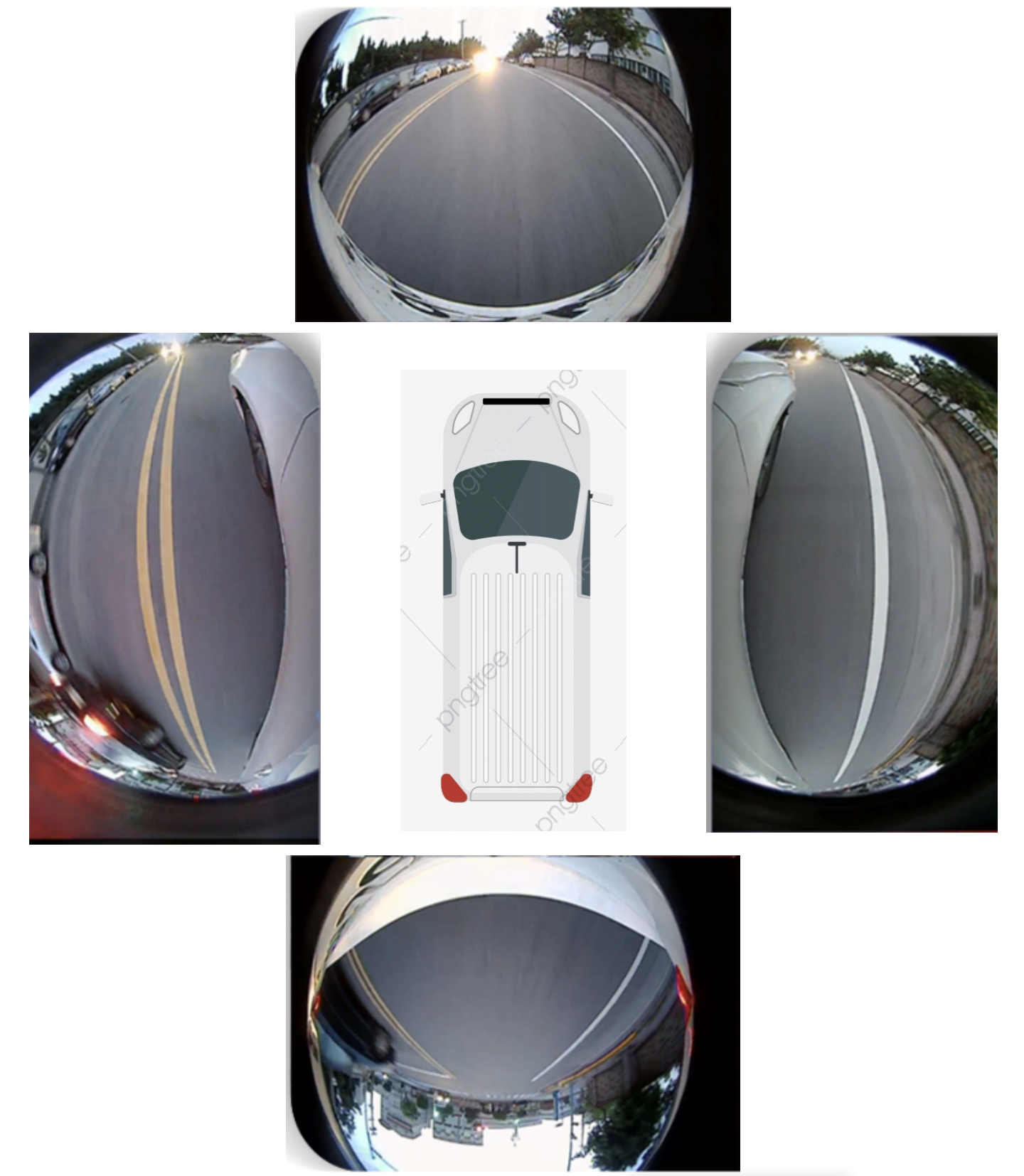

PAIR-LITEON Competition: Embedded AI Object Detection Model Design Contest on Fish-eye Around-view Cameras

Object detection has been studied extensively in computer vision and has made tremendous progress in recent years thanks to deep learning methods. However, because most deep learning-based algorithms are computationally heavy, these models are hard to run on embedded systems, which have limited computing capabilities. In addition, existing open datasets for object detection in ADAS applications with the 3-D AVM scene usually cover pedestrians, vehicles, cyclists, and motorcycle riders in Western countries. Traffic there differs considerably from that of crowded Asian countries such as Taiwan, where many motorcycle riders speed along city roads, so object detection models trained on the existing open datasets cannot be applied to detecting moving objects in Asian countries like Taiwan.

In this competition, we encourage participants to design object detection models that can be applied to Asian traffic, where many fast-moving motorcycles share city roads with vehicles and pedestrians. The developed models should not only fit embedded systems but also achieve high accuracy.

Challenge on Visual Attention Estimation in HMD 2023

To improve the experience of XR applications, visual attention estimation techniques have been developed to predict human intention so that the HMD can pre-render visual content and reduce rendering latency. However, most deep learning-based algorithms require heavy computation to achieve satisfactory accuracy. This is especially challenging for embedded systems with limited resources such as computing power and memory bandwidth (e.g., standalone HMDs). In addition, progress in this research field depends on richer data, yet existing datasets are still lacking in number and diversity. For this challenge, we collected a set of 360° MR/VR videos together with user head pose and eye gaze signals. The goal of this competition is to encourage contestants to design lightweight visual attention estimation models that can be deployed on an embedded device with constrained resources. The developed models need to not only achieve high fidelity but also perform well on the device.

Detailed information can be found at:

https://aidea-web.tw/acmmmasia2023_vae

Organizers:

Min-Chun Hu, National Tsing Hua University

Tse-Yu Pan, National Taiwan University of Science and Technology

Herman Prawiro, National Tsing Hua University

CM Cheng, MediaTek

Hsien-kai Kuo, MediaTek
